10-bit and beyond...

After reading through the preceding pages, I'm sure that many people are asking, "What next?" The answer, which I'm sure won't surprise you in the slightest, is already being hyped. Meet Deep Colour, True Colour's cousin. Deep Colour is exactly what it says on the tin - any colour depth above 8bpc. At 10 and 12bpc, that works out to anywhere between roughly one billion and 68 billion possible colours for your viewing pleasure, and it arrives alongside the new xvYCC colour space.
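For anyone who wants to check the "one to 68 billion" figure, here is a minimal sketch of the arithmetic. It assumes three channels (red, green, blue) at the same bit depth; the function name `colour_count` is made up for this illustration.

```python
def colour_count(bits_per_channel: int, channels: int = 3) -> int:
    """Number of distinct colours for a given per-channel bit depth."""
    # Each channel has 2**bits levels, and the channels combine freely.
    return 2 ** (bits_per_channel * channels)


for bpc in (8, 10, 12):
    print(f"{bpc}bpc: {colour_count(bpc):,} colours")
# 8bpc:  16,777,216
# 10bpc: 1,073,741,824
# 12bpc: 68,719,476,736
```

So 10bpc lands just over one billion colours and 12bpc just under 69 billion, which is where the range quoted above comes from.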

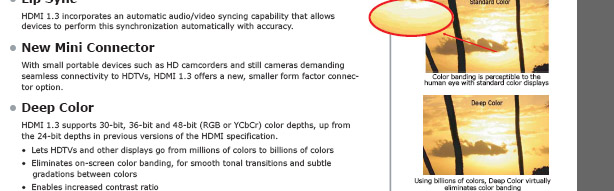

You can read about some of the ideas behind Deep Colour in the HDMI 1.3 brochure. Its purpose is primarily to further reduce banding in largely monochromatic situations (like large explosions, or bright sunlight), where subtle colour changes may not be so subtle as they dominate the screen.

It's important to note that you don't really have to go looking for Deep Colour on computer screens - 10bpc is not really in the cards at the moment. It's not that somehow a PC has less benefit than anything else from the change (indeed, one would think it would be more beneficial since you're closer to the pixels), but instead it's a software limitation. I mentioned on page two how DirectX 10 can utilise 32-bit colour formats, and that this gets "dumbed down" by Windows - this is an important point.

On PCs, whether using CRT or LCD monitors, your OS is forcing you down to 8bpc no matter what. It's hard-coded into every OS, and can only be circumvented by using floating-point calculations, which can vastly expand the palette at the expense of some processing power. That's one of the reasons why there's not really a benefit to putting HDMI 1.3 onto motherboards and graphics cards at the moment - the bandwidth for Deep Colour is one of its main purposes, and current operating systems just don't allow for its use.

Colourful marketing

Before I close out this little treatise on colour depth, I need to interject something that sits on the border between fact and opinion. Remember when I mentioned that the eye is effectively a binary system of "off" and "on"? Well, here's where that comes into play. It is scientifically estimated that at best the human eye can discern approximately 30 bits of colour - there is simply a finite number of cone cells to trip. Now, that's a perfect eye - which, statistically speaking, can only belong to a woman (as colour genes are carried on the X chromosome). Men in general have a lower sense of colour depth. Then add the fact that a whopping one in ten human males suffers from some form of bad-enough-to-measure colour deficiency (the politically correct term for colour blindness), severely reducing the ability to see large portions of the spectrum with much fidelity.

The prevalent medical view is that the eye sees between 7 and 8 bits of red, a mediocre 5 to 6 bits of blue (we're considerably less sensitive) and a massive 10 bits of green. That means that well over two-thirds of the colours that could be rendered by going up to even a 10-bit display would not be seen, at least in theory. For example, look at this image on an 8-bit display or CRT. It's made in 7-bit colour, providing 128 "steps" for each channel. You'll see that the red and blue both look like smooth gradients (note how the red bar is higher than the blue, as well) - but you will see banding in the green as it transitions to black.
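To put a rough number on "well over two-thirds", here is a back-of-the-envelope sketch. It assumes the upper end of each per-channel estimate quoted above (8 bits red, 10 bits green, 6 bits blue) and treats the channels as independent, which is a simplification of how vision actually works. The names `EYE_BITS` and `visible_fraction` are made up for this illustration.

```python
# Assumed inputs: the upper end of each per-channel estimate quoted above.
EYE_BITS = {"red": 8, "green": 10, "blue": 6}


def visible_fraction(display_bpc: int, eye_bits: dict = EYE_BITS) -> float:
    """Fraction of a display's colours that fall within the eye's estimated range."""
    displayed = 1
    seen = 1
    for bits in eye_bits.values():
        displayed *= 2 ** display_bpc
        # The eye cannot use more levels than the panel actually provides.
        seen *= 2 ** min(bits, display_bpc)
    return seen / displayed


for bpc in (8, 10):
    print(f"{bpc}bpc: {visible_fraction(bpc):.1%} of displayable colours distinguishable")
```

Under those assumptions the eye manages 2^24 combinations against the 2^30 a 10bpc panel can show, or one colour in 64, so the unseen share is comfortably more than two-thirds.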

Deeeeeeep colour.

That's not to say Deep Colour is useless - in fact, far from it. Our eyes are more sensitive to green and banding does happen even with 8-bit displays, specifically in more monochromatic situations with a lot of yellow or white light (which contain lots of green). And though Tim and I have gone round and round regarding how much is affected by what, there is no denying that 10bpc for at least the green would be largely within our visual range, most likely virtually eliminating any further colour depth issues. Many people may or may not notice the difference, but still - if the bandwidth is there, why not use it?

But we need to look beyond that. In reality, anything beyond the 10-bit green channel provided by the first iteration of Deep Colour is unlikely to solve our colour woes. So rather than putting all of this effort into going up to 12 and 16bpc, I think more effort should be put into the display technology itself - backlighting, as mentioned on the previous page, plays a pivotal role in how we view the colours that our panels can produce, as does the polarisation used to make LCDs function.

Now that we're starting to cross into 10bpc on TVs, we've hit the theoretical point of diminishing returns. We need to be enhancing the technology to let us use the bits per channel we have, because brute-forcing more numbers through isn't going to help. If we want better colour fidelity, it's going to have to come from an evaluation of everything that makes up displays as we know them.

Conclusion

Well, I hope you've enjoyed this look into colour depth. At the very least, I hope it's been a bit educational and that you have picked up something you didn't know before. Colour depth isn't just that handy little number that you flip in Display Properties, bouncing between 16- and 32-bit to play "Spot the differences." No, colour depth is a lot, well, deeper than that. It's an issue that I've seen company after company shy away from as they produce our displays, hyping numbers that don't really change our viewing experience while neglecting ones that can radically alter how we look at our games, our pictures, and our digital lives.

On the flip side, there are others just dying to make a buck by going past the point of sensibility... 48-bit colour? At least as far as scientific theory goes, we're already well past where we can get a benefit once we cross to 12bpc. At some point, we have to look beyond the "bigger equals better" mentality and fix the other aspects that are destroying display fidelity.

And once we reach that point, maybe operating systems will start to catch up as well, bringing Deep Colour to a computer near you.

Editor's Comment:

I don't often add another perspective to other writers' articles, but this is an exception because the two of us could not agree on how higher colour bit depths could benefit the end user - I think that is a testament to how wide open this topic really is. Therefore I felt that it was worth chiming in with my own view of how I believe our eyes differentiate colours. I believe that there will be benefits to higher bit depths in certain situations, but not all - allow me to explain. From what I understand after many discussions, Brett's perspective is that the amount of colour the eye can detect is an absolute value, while my perspective is that it is not. By this I mean that you should be able to get much finer gradients of colour when you're making small transitions.

For example, say you've got a screen that's 1920 pixels wide and the majority of it is filled with a gradient of one colour in particular. Think of looking right at the sun, or a big explosion on the screen - the picture is mostly washed out with one colour and, in order to prevent banding, you want as many steps as possible from the brightest to the darkest part of that colour. If you have fewer than 1920 different shades available for that gradient, you're going to see banding regardless. If there are 1920 or more shades of that colour available, you will not see banding on the screen.
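The 1920-pixel argument can be sketched in a few lines. This is only an illustration under simple assumptions: a single-channel ramp running from black to full brightness across the whole width, quantised with integer maths and no dithering. The helper name `widest_band` is made up for the example.

```python
def widest_band(width_px: int, bits: int) -> int:
    """Width in pixels of the widest run of identical values in a full-range ramp."""
    levels = 2 ** bits
    # Map each pixel column to its quantised level on a black-to-full ramp.
    ramp = [x * (levels - 1) // (width_px - 1) for x in range(width_px)]
    widest = run = 1
    for prev, cur in zip(ramp, ramp[1:]):
        # Extend the current band while the value repeats, otherwise start a new one.
        run = run + 1 if cur == prev else 1
        widest = max(widest, run)
    return widest


for bits in (8, 10, 11):
    print(f"{bits}-bit ramp over 1920px: widest band {widest_band(1920, bits)}px")
```

With these assumptions the widest band is 8 pixels at 8 bits, 2 pixels at 10 bits and a single pixel at 11 bits - the point at which there are more shades (2048) than columns (1920), so no two neighbouring pixels share a value.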

The eye can only see the amount of colour that Brett refers to at any point in time - it's not an absolute value. Think of it like a floating point calculation in 3D graphics - there is a set amount of colour your eyes can determine at any point in time, meaning that with small transitions of colour, you can see more steps of the same colour.

However, when you're making large transitions in colour you're still limited to the same range (i.e. the 10 bits of green, 7-to-8 bits of red and 5-to-6 bits of blue that Brett talks about), which means that the number of steps doesn't change, but the number of transitions does. You're obviously not going to see as much benefit (if any) in scenarios such as this one.

- Tim
